I don’t mean to be too inflammatory here, but let’s face it: when a set of guidelines that was agreed upon across specialties, that is fairly easy to memorize (only one variable), and that was published some 10 years ago STILL doesn’t have its recommendations routinely included in the majority of radiology reports, well, we’ve got a problem.

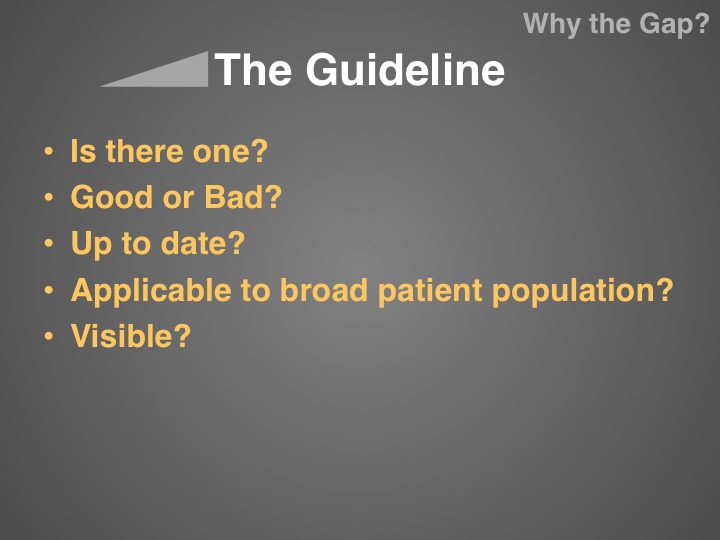

And I don’t lay the blame at the feet of the Fleischner Society. Rather, the problem is guidelines themselves…all guidelines…and the Fleischner Society guidelines are just the most obvious example. Guidelines exist to be adopted and to affect care on a large scale. They create a sort of “magnetic line” for practice to gravitate toward. Unfortunately, most published guidelines just don’t accomplish that goal in a timely or effective manner…and that is what sucks!

At the recent RSNA meeting, we presented our experience launching RadsBest and some of what we’ve learned from our many users. I think the most important lesson is that many of these guidelines…well, they just aren’t being adopted the way they could be, and there are important reasons why.

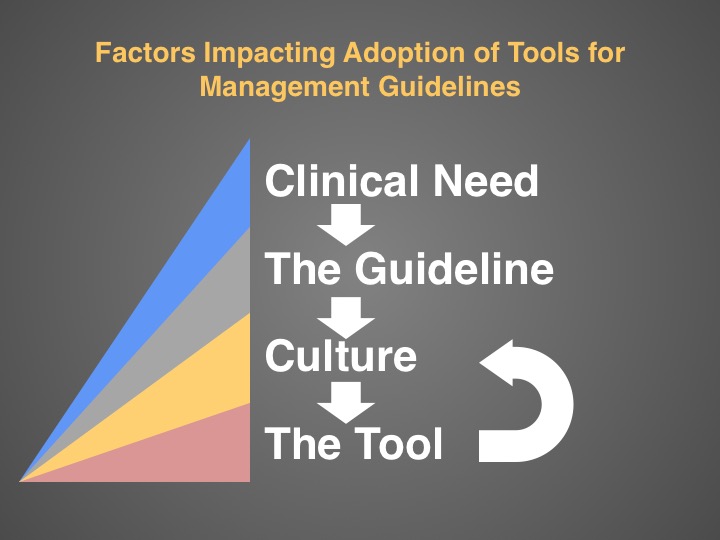

Here is a portion of the presentation that we made. It contains our breakdown of the factors that have affected the adoption of clinical management guidelines like the Fleischner Society Criteria and the ACR Management Guidelines for Incidental Findings.

Read on.

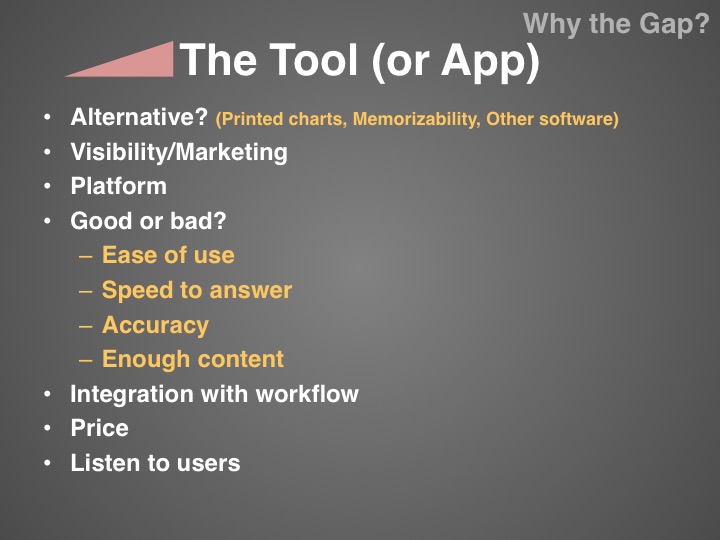

When publishing guidelines for clinical management, there is a constant tension between creating something that is useful on a practical level (i.e., an algorithm that is not too onerous to memorize or navigate) and something that applies to a wide variety of patient populations and is detailed enough to optimize care for each individual patient. Unfortunately, when you publish such a guideline, you usually have to trade one of these off against the other. Decision support technology like RadsBest can eliminate that tension between usable and accurate by putting the complexity in the back end, where the software, rather than the radiologist’s memory, handles it. That makes it possible to create algorithms that are BOTH usable AND accurate.

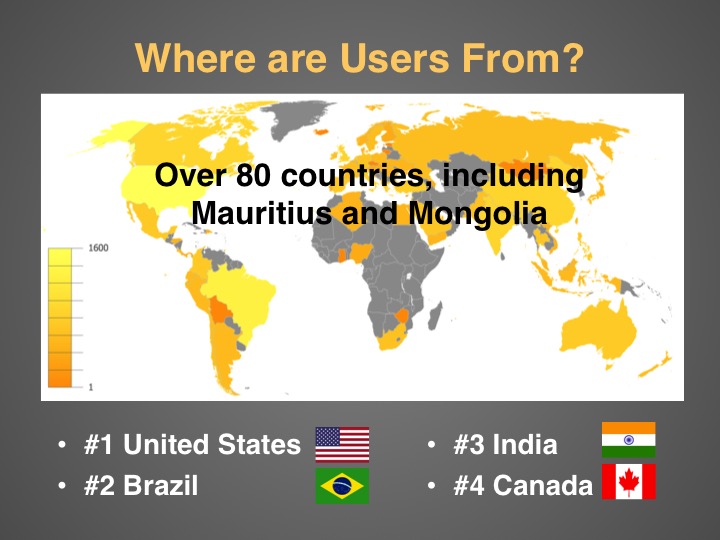

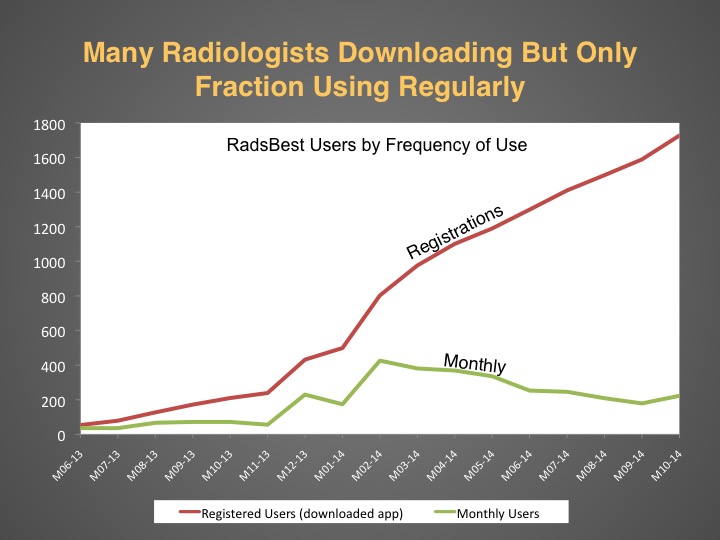

As of November, RadsBest had been downloaded by users around the world, with just under 2,000 registered users…and even more now.

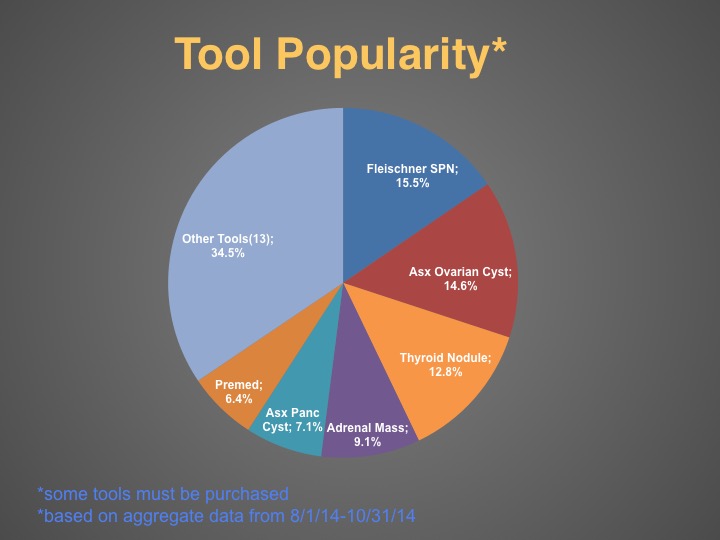

Interestingly, two of the three most popular tools are based on guidelines published WAY back in 2005! The top tool is the Fleischner Society Criteria for solitary pulmonary nodules, which suggests that many radiologists (myself included) are probably not fully memorizing even the simplest of guidelines.

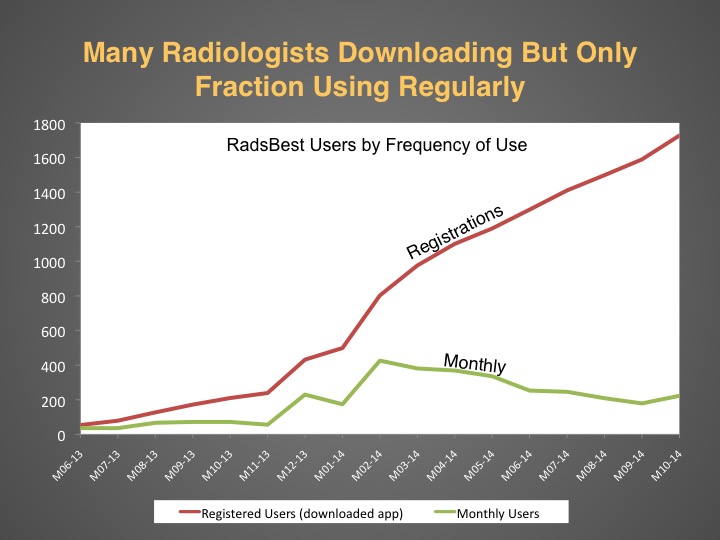

Importantly, quite a few radiologists download the app but don’t use it much beyond an initial look. This drop-off in user activity is common with any product, but it still provides insight. Why is there such a drop-off?

The most obvious explanation for the gap between registered users and “active” users is that the app, or what it does, just isn’t that useful or desirable…or that the app doesn’t work well. That is why we are extremely interested in feedback from all of our users, both those who use it frequently and those who never do. We are always working on improvements that will make the app an indispensable tool.

But if you think about it a bit more, there is another factor that could explain that drop-off in activity.

RadsBest is really the only available app that does what it does: management decision support for the practicing radiologist. Since there is no comparable tool that users could be switching to, it is reasonable to assume that the drop-off in usage is also a coarse surrogate for how much radiologists are actually adopting these management guidelines in their daily practice, that is, putting those recommendations into their reports.

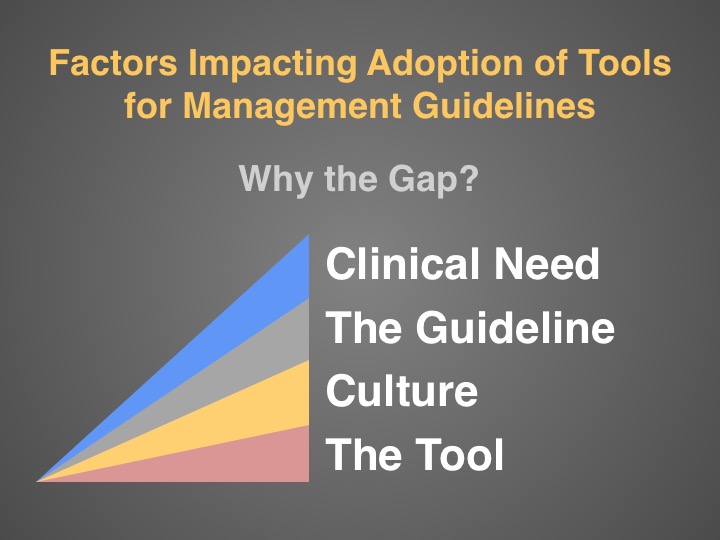

Here is a simplified breakdown of the factors that affect the adoption of a management decision support tool like RadsBest.

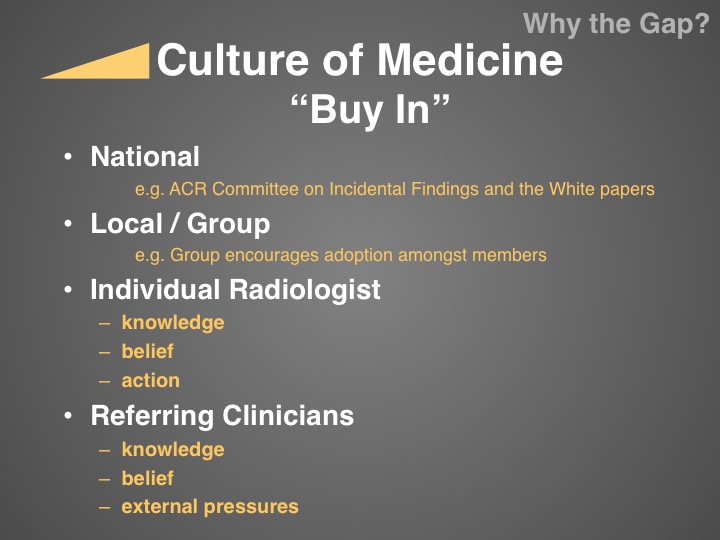

This is probably the most important slide of the talk. I believe “buy-in” is the biggest issue facing radiology when it comes to implementing a more standardized approach to managing findings. You need buy-in from your local radiologist, who has to make a conscious decision to apply management guidelines in each and every report. But, more importantly, you need buy-in from your clinicians as well…and this is not just philosophical buy-in; there are often financial factors that complicate matters. Where I practice, referring physicians are under intense pressure from their IPAs (independent practice associations) to cut costs, including imaging costs. They may not agree that pulmonary nodules should be followed at the frequency recommended by the Fleischner Society, because that would mean a lot of follow-up CT scans that someone has to pay for.

Also, many of the guidelines currently being advocated by the American College of Radiology (ACR) are simply not known to our referrers. It is a double-edged sword, but many of these guidelines were not created in conjunction with the other medical specialties that have a stake in them. That is an obvious obstacle to getting national-level buy-in, which is the level of support needed for guidelines like these to be widely adopted.

An app like RadsBest (or anything that serves a similar function) can actually help drive this culture shift within medicine, not only by making the guidelines easier to use, thereby lowering the barrier to entry, but also by making them more visible. The app collects many guidelines in one place, and I would bet that most radiologists were not aware that all of them existed.

We are working hard to make a better product. We have great ideas for future improvements and really welcome feedback from our users on how we can make the app even better and more useful. Please drop us a line through the feedback link in the app or leave a comment below.

RK